Across many organisations, a quiet trend is gaining speed: employees are increasingly using free AI tools without approval or oversight. What began as a clever way to boost productivity has quickly turned into a blind spot for risk, with staff tapping into publicly available AI models, often unaware of the potential consequences.

This isn’t just a tech issue. It’s a shift in workplace behaviour that’s forcing IT and security teams to rethink how AI fits into the wider digital environment. It’s something we at Synergy have seen play out over time.

This trend affects all parts of an organisation:

Unapproved Tools Are Everywhere

From marketing teams using free text generators to developers relying on open-source code assistants, AI tools are being used across departments, often under the radar. With no central tracking, it's impossible to know which tools are in use, what data is being entered, or where that information ends up.

Sensitive Data Could Be Leaking Out

Most free AI tools aren’t built with enterprise security in mind. When employees enter internal documents, client details, or proprietary code into these platforms, that data could be stored, analysed, or even used to train future models. Without realising it, your people could be exposing critical business information.

Not All Models Are Created Equal

AI models don't all treat your data the same way. Some free or public models collect user inputs to help improve the system overall, which means anything your team types, such as client details or internal plans, could be used to train the next version of the model. In contrast, enterprise AI models can be configured to work within your organisation's environment, drawing only on your company's data and keeping it securely contained. These internal deployments don't share your inputs with other users or learn from them across customers, which makes them far safer for handling sensitive or regulated information.

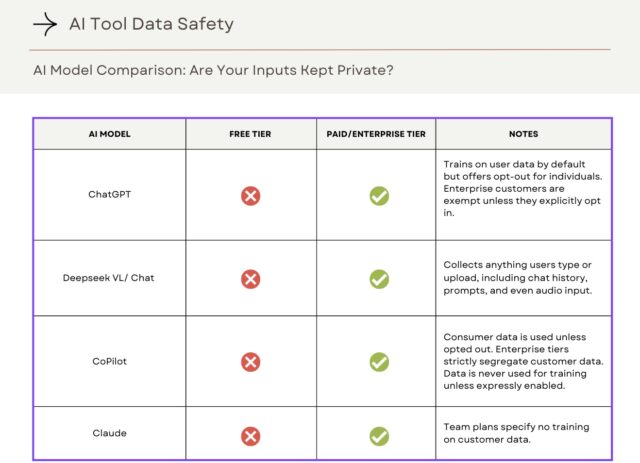

Knowing which type of model you're using and how it handles your data is essential for staying compliant and protecting your business. We have created a table highlighting the differences between the paid and unpaid versions of these tools.

It’s Time for a Smarter Approach to AI Use

To get ahead of the risk, businesses need to formalise how AI is used internally. That means building clear guidelines, providing approved tools that meet security standards, and educating teams on what’s safe and what’s off-limits.

With the right infrastructure, including enterprise AI platforms designed for secure use, organisations can enable innovation without exposing themselves to avoidable risks. These solutions offer encryption, permission controls, and full visibility into how AI is being used, giving IT teams the ability to govern AI usage effectively.

Is Your Business Ready for the AI Era?

AI isn't going anywhere, and neither is the desire for teams to use it to work smarter. The question is no longer whether your people will turn to AI, but whether you've put the right boundaries and support in place to guide them safely. With so many AI tools available, each with its own nuances, it is important to know where each one stands on using your inputs to train its models. We have created a table showing four popular AI tools and the extent to which each can use the data entered into it for model training.

Need Help Creating a Safe AI Strategy?

We work with businesses to audit AI use, reduce exposure, and design governance frameworks that support safe, compliant innovation. Whether you need help setting policies or rolling out enterprise-grade AI tools, we’ll help you take control of AI before it becomes a business risk.

Reach out to Synergy today to learn how we can help you turn AI from a risk into a strategic advantage.
